Abstract

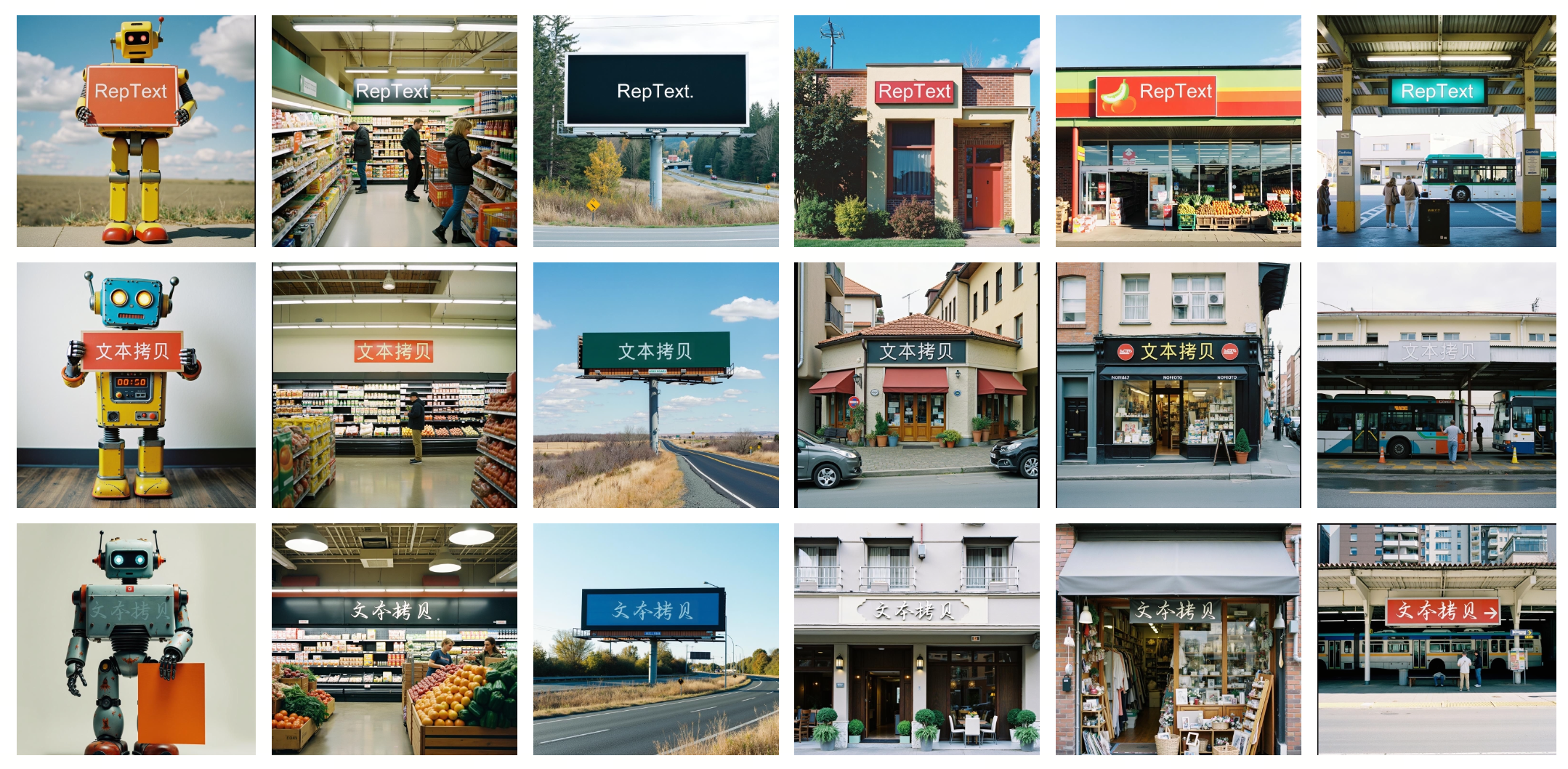

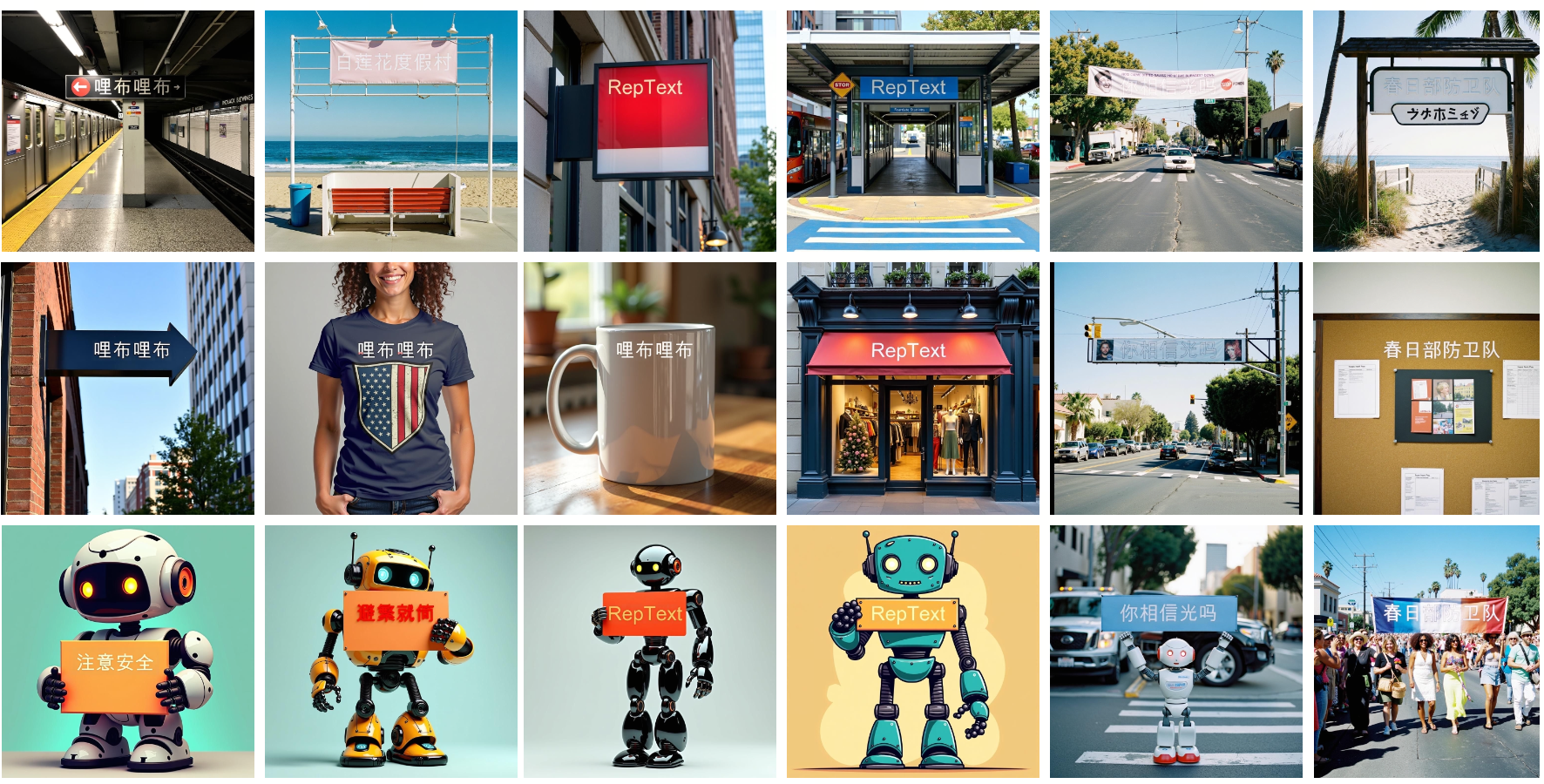

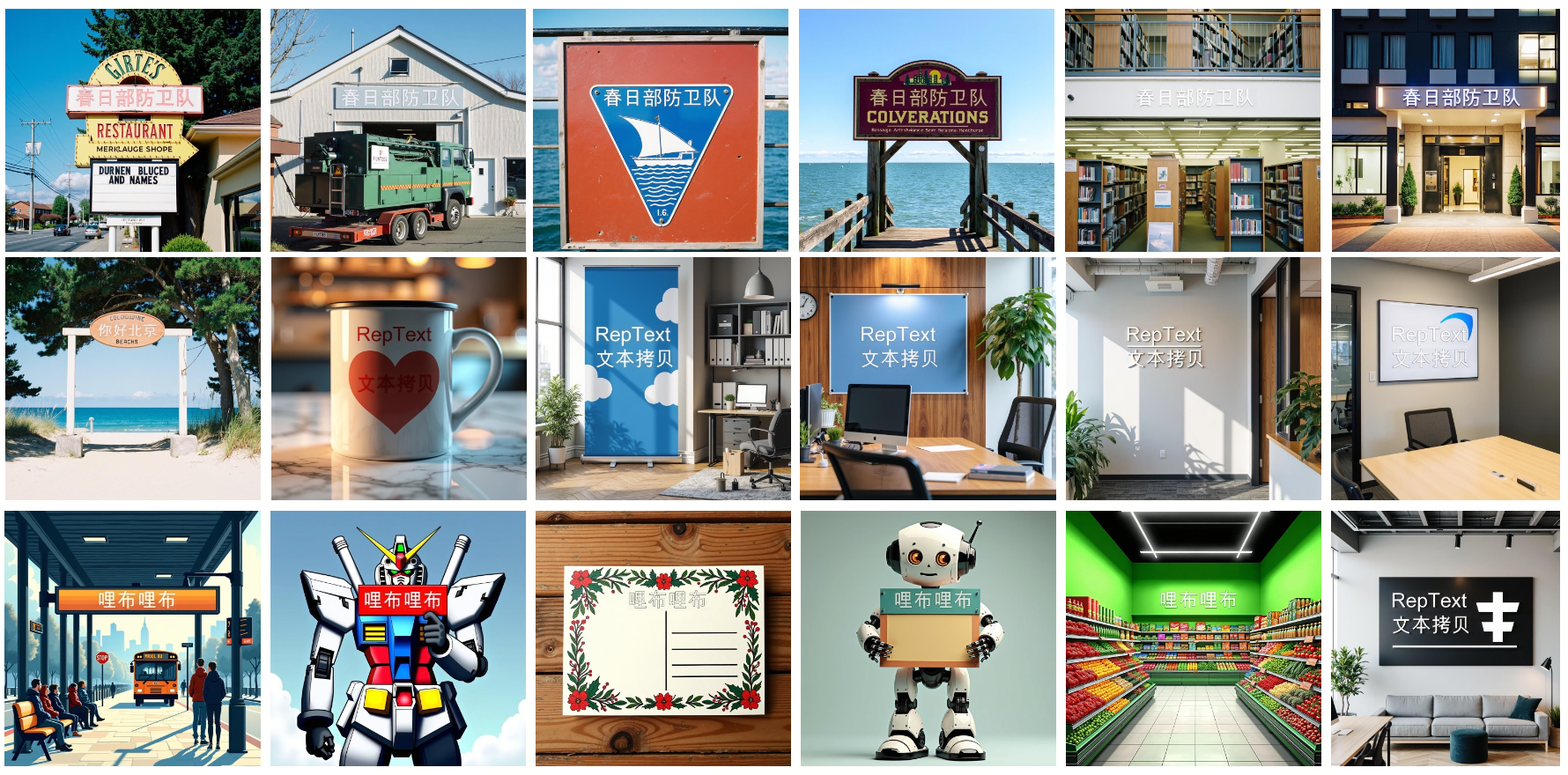

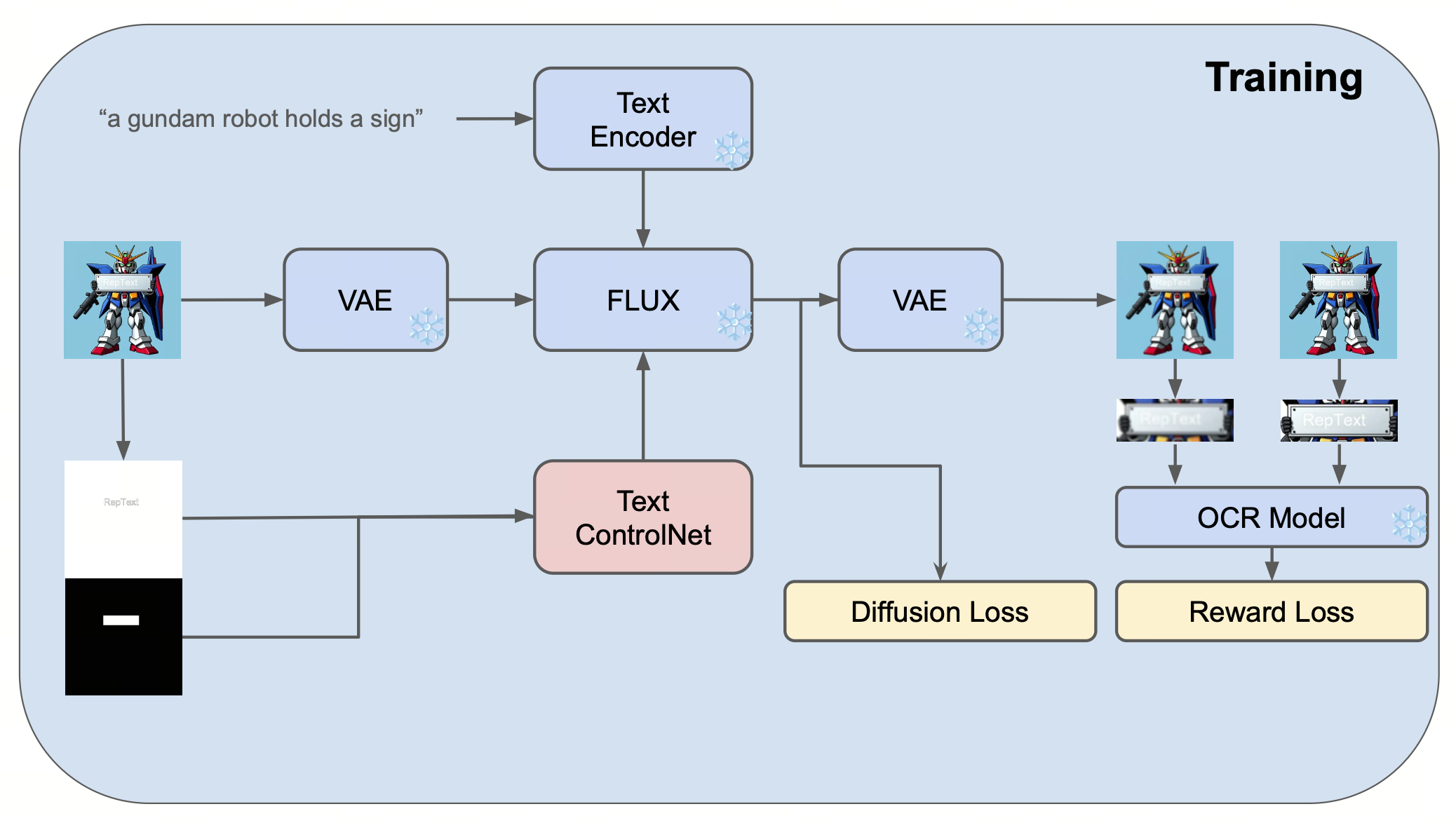

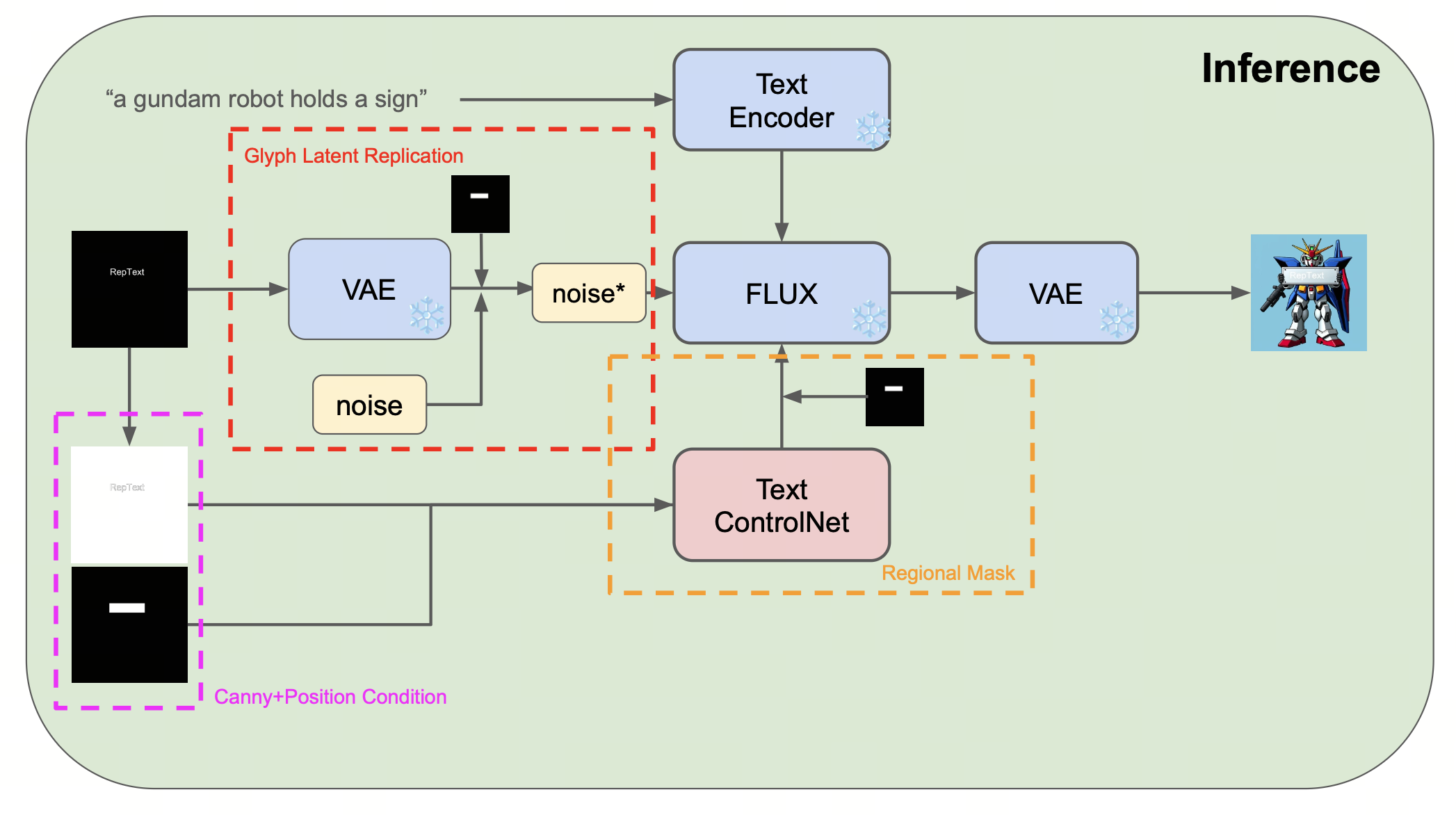

Although contemporary text-to-image generation models have achieved remarkable breakthroughs in producing visually appealing images, their capacity to generate precise and flexible typographic elements, especially in non-Latin alphabets, remains limited. This limitation stems mainly from two factors: text encoders cannot effectively handle multilingual inputs, and multilingual data are unevenly distributed in the training set. To support text rendering for specific languages, some works replace the existing monolingual encoder with a dedicated text encoder or a multilingual large language model and retrain the model from scratch to give the base model native rendering capabilities, but this inevitably incurs high resource consumption. Other works leverage auxiliary modules to encode text and glyphs while keeping the base model intact for controllable rendering. However, most of these are built for UNet-based models rather than recent DiT-based models (SD3.5, FLUX), which limits their overall generation quality. To address these limitations, we start from a naive assumption: text understanding is only a sufficient condition for text rendering, not a necessary one. Based on this, we present RepText, which aims to empower pre-trained monolingual text-to-image generation models with the ability to accurately render, or more precisely, replicate, multilingual visual text in user-specified fonts, without needing to actually understand it. Specifically, we adopt the ControlNet setting and additionally integrate the language-agnostic glyph and position of the rendered text to generate harmonized visual text, allowing users to customize text content, font and position according to their needs. To improve accuracy, a text perceptual loss is employed along with the diffusion loss.
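The training objective described above can be sketched as a weighted sum of the two losses. This is a minimal illustration under stated assumptions, not the authors' implementation: the weight `lam`, the use of mean squared error for both terms, and the `features` callable (a hypothetical stand-in for a pretrained text-recognition network applied to the predicted and ground-truth images) are all assumptions.

```python
import numpy as np
from typing import Callable


def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean squared error between two arrays of the same shape."""
    return float(np.mean((a - b) ** 2))


def combined_loss(
    pred: np.ndarray,
    target: np.ndarray,
    image_pred: np.ndarray,
    image_gt: np.ndarray,
    features: Callable[[np.ndarray], np.ndarray],
    lam: float = 0.1,  # hypothetical weight of the text perceptual term
) -> float:
    # Diffusion loss: regress the model output onto its target
    # (noise or velocity, depending on the base model's parameterization).
    l_diff = mse(pred, target)
    # Text perceptual loss (assumed form): compare features that a
    # text-aware network extracts from the predicted and ground-truth images.
    l_perc = mse(features(image_pred), features(image_gt))
    return l_diff + lam * l_perc


# Usage with an identity "feature extractor" as a placeholder.
loss = combined_loss(
    pred=np.zeros(4), target=np.ones(4),
    image_pred=np.zeros((2, 2)), image_gt=np.full((2, 2), 2.0),
    features=lambda x: x, lam=0.5,
)
print(loss)  # 3.0
```

Keeping the perceptual term as a separate, weighted addend means it can be tuned or disabled without touching the base diffusion objective.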
Furthermore, to stabilize the rendering process at inference, we initialize directly from a noisy glyph latent instead of random noise, and adopt region masks that restrict feature injection to the text region so that other regions are not distorted. Extensive experiments verify the effectiveness of RepText relative to existing works: our approach outperforms existing open-source methods and achieves results comparable to native multilingual closed-source models. For fairness, we also discuss its limitations in detail at the end.
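The two inference-time techniques can be sketched as follows. The sketch assumes the rectified-flow convention used by DiT models such as SD3.5 and FLUX, where a latent at noise level `sigma` is `(1 - sigma) * z0 + sigma * eps`; the function names, the value of `sigma`, and the additive form of the masked injection are illustrative assumptions, not RepText's code.

```python
import numpy as np


def init_from_glyph_latent(glyph_latent: np.ndarray, sigma: float,
                           rng: np.random.Generator) -> np.ndarray:
    """Noisy glyph latent used in place of pure Gaussian noise (assumed form).

    Rectified-flow interpolation: sigma = 1 gives pure noise, sigma = 0 gives
    the clean glyph latent; an intermediate sigma keeps a trace of the glyphs.
    """
    noise = rng.standard_normal(glyph_latent.shape)
    return (1.0 - sigma) * glyph_latent + sigma * noise


def masked_injection(hidden: np.ndarray, control_residual: np.ndarray,
                     region_mask: np.ndarray, scale: float = 1.0) -> np.ndarray:
    """Add ControlNet residuals only where region_mask == 1 (the text region).

    region_mask (H, W) broadcasts over the channel dimension, so features
    outside the text region are left untouched.
    """
    return hidden + scale * region_mask * control_residual


rng = np.random.default_rng(0)
glyph = np.ones((4, 2, 2))                   # stand-in glyph latent (C, H, W)
z_init = init_from_glyph_latent(glyph, sigma=0.9, rng=rng)

mask = np.array([[1.0, 0.0], [0.0, 0.0]])    # text occupies the top-left cell
out = masked_injection(np.zeros((4, 2, 2)), np.ones((4, 2, 2)), mask)
```

Because the mask multiplies the residual rather than the hidden state, background features pass through unchanged, which is the behavior the region mask is meant to guarantee.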

Method

RepText aims to achieve text rendering on top of the latest monolingual base models by replicating glyphs. Specifically, instead of using additional image or text encoders to understand words, we teach the model to replicate glyphs by employing a text ControlNet conditioned on canny and position images. Additionally, we introduce glyph latent replication at initialization to enhance text accuracy and support color control. Finally, a region masking scheme is adopted to ensure good generation quality and prevent the background from being disturbed. In summary, our contributions are threefold: (1) We present RepText, an effective framework for controllable multilingual visual text rendering. (2) We introduce glyph latent replication to improve typographic accuracy and enable color control; in addition, a region mask is adopted for good visual fidelity without background interference. (3) Qualitative experiments show that our approach outperforms existing open-source methods and achieves results comparable to native multilingual closed-source models.
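One way the position condition and the region mask could be derived from user-specified text boxes is sketched below. Everything here is an assumption for illustration: boxes are given as pixel coordinates `(x0, y0, x1, y1)`, the position image is a binary map, and the region mask is obtained by max-pooling that map by an assumed downsampling factor of 8, so that any latent cell touching text is marked. The canny condition, which would come from an edge detector applied to the rendered glyph image, is omitted.

```python
import numpy as np
from typing import Iterable, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels, end-exclusive


def position_image(height: int, width: int, boxes: Iterable[Box]) -> np.ndarray:
    """Binary map: 1.0 inside every text box, 0.0 elsewhere (hypothetical format)."""
    img = np.zeros((height, width), dtype=np.float32)
    for x0, y0, x1, y1 in boxes:
        img[y0:y1, x0:x1] = 1.0
    return img


def latent_region_mask(pos_img: np.ndarray, factor: int = 8) -> np.ndarray:
    """Max-pool the position map to latent resolution.

    A latent cell is marked as text if any pixel in its factor x factor patch is.
    """
    h, w = pos_img.shape
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by the downsampling factor")
    patches = pos_img.reshape(h // factor, factor, w // factor, factor)
    return patches.max(axis=(1, 3))


# A 64x64 canvas with one text box spanning x in [8, 40), y in [16, 24).
pos = position_image(64, 64, [(8, 16, 40, 24)])
mask = latent_region_mask(pos)
```

Max-pooling (rather than averaging) errs on the side of including partially covered latent cells, so glyph edges are not cut off by the mask.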

Comparison with Previous Works

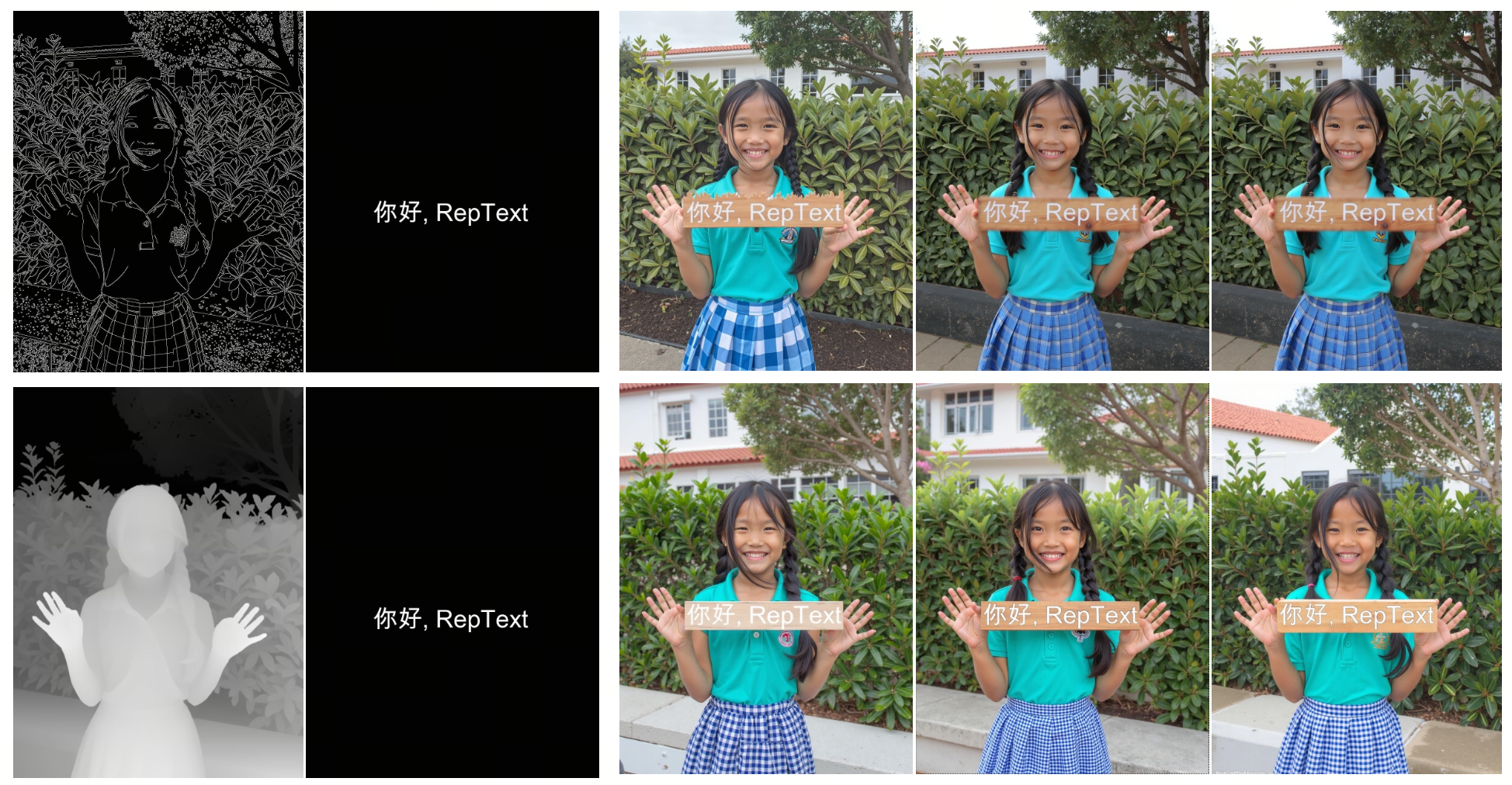

Compatibility with Other Works

BibTeX

@article{wang2025reptext,
  title={RepText: Rendering Visual Text via Replicating},
  author={Wang, Haofan and Xu, Yujia and Li, Yimeng and Li, Junchen and Zhang, Chaowei and Wang, Jing and Yang, Kejia and Chen, Zhibo},
  journal={arXiv preprint arXiv:2504.19724},
  year={2025}
}